Chatbot with PaLM API

Firebase

Sep 12, 2023

Introduction

AI chatbots have become very popular recently, letting users hold real-time conversations with advanced AI models.

The Chatbot with PaLM API extension provides an out-of-the-box solution for holding conversations with the Google PaLM API.

Google PaLM API

Google’s PaLM API is an API layer that provides access to PaLM, Google’s new large language model (LLM). An LLM is a type of artificial intelligence program designed to understand, generate, and respond to human language in a way that is coherent and contextually relevant.

Getting Started with the Chatbot with PaLM API extension

Before you can securely access the PaLM API, make sure you have a Firebase project set up. If this is your first time using Firebase, start by creating a project in the Firebase console.

Once your project is prepared, you can integrate the firestore-palm-chatbot extension into your application.

Extension Installation

Installing the “Chatbot with PaLM API” extension can be done via the Firebase Console or using the Firebase CLI.

Option 1: Firebase Console

Navigate to the Firebase Extensions catalog and locate the “Chatbot with PaLM API” extension. Click “Install in Firebase Console” and complete the installation by configuring the extension’s parameters.

Option 2: Firebase CLI

For those who prefer using command-line tools, the Firebase CLI offers a straightforward installation command:

```shell
firebase ext:install googlecloud/firestore-palm-chatbot --project=your_project_id
```

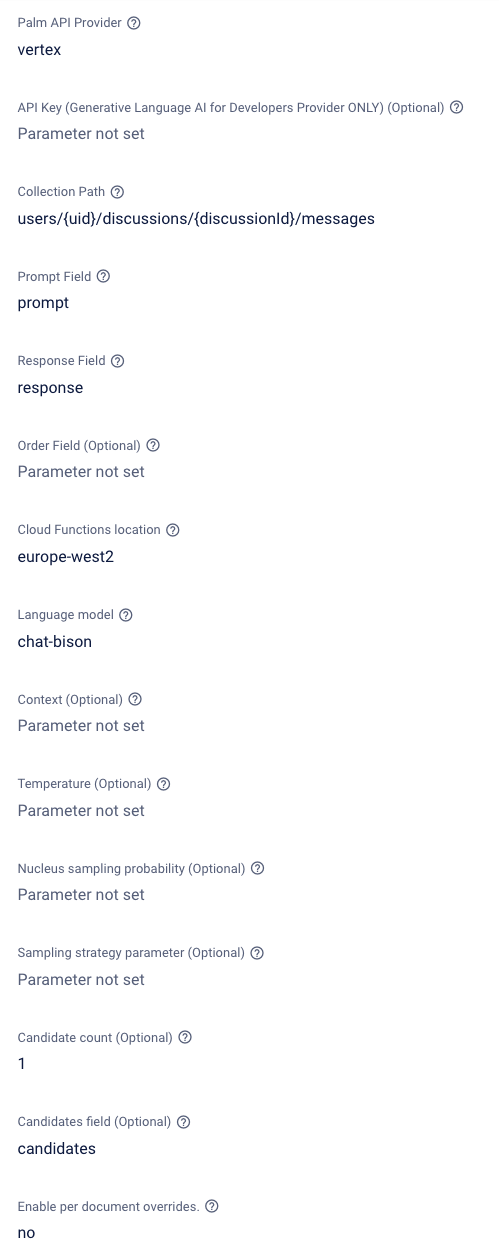

Configure Parameters

Configuring the “Chatbot with PaLM API” extension involves several parameters to help you customize it. Here’s a breakdown of each parameter:

- PaLM API Provider:

- Description: Two services provide access to the PaLM API: Vertex AI and Generative Language. If you select Vertex AI, the service is enabled automatically. If you select Generative Language, you can provide an API key obtained through MakerSuite or the Google Cloud console, or use Application Default Credentials if the Generative Language API is enabled in your Google Cloud project.

- API Key (Generative Language provider only) (Optional):

- Description: If you selected Generative Language as your PaLM API provider, you can optionally provide an API key. If you do not provide one, the extension uses Application Default Credentials, which requires the Generative Language API to be enabled in your Google Cloud project.

- Collection Path:

- Description: The Firestore collection that holds the message documents the Chatbot with PaLM API extension should process. The extension watches this collection for new documents.

- Example:

users/{uid}/discussions/{discussionId}/messages

- Prompt Field:

- Description: The field in the message document that contains the prompt.

- Response Field:

- Description: The field in the message document into which to put the response.

- Order Field:

- Description: The field by which to order when fetching conversation history. If this field is absent when processing begins, the extension writes the current timestamp to it. Sorting is in descending order.

- Example:

createTime

- Cloud Functions Location:

- Description: For optimized performance, choose the deployment location for the extension’s functions, ideally close to your database.

- See the Cloud Functions location selection guide.

- Language model:

- Description: The language model to use.

- Example: chat-bison

- More information about language models

- Context:

- Description: Contextual preamble for the language model. A string giving context for the discussion.

- Temperature:

- Description: Controls the randomness of the output. Values can range over [0,1], inclusive. A value closer to 1 will produce responses that are more varied, while a value closer to 0 will typically result in less surprising responses from the model.

- Nucleus Sampling Probability:

- Description: If specified, nucleus sampling is used as the decoding strategy. Nucleus sampling considers the smallest set of tokens whose cumulative probability is at least a fixed value. Enter a value between 0 and 1. A value closer to 1 means the PaLM API considers a larger subset of words when generating text, which can lead to more creative and diverse output but also carries a higher risk of errors.

- Sampling Strategy Parameter:

- Description: If specified, top-k sampling is used as the decoding strategy. Top-k sampling considers only the topK most probable tokens, where topK is the value you enter.

- Candidate Count:

- Description: The default value is one. When set to an integer higher than one, additional candidate responses, up to the specified number, will be stored in Firestore under the ‘candidates’ field.

- Candidates Field:

- Description: The field in the message document into which to put the other candidate responses if the candidate count parameter is greater than one.

- Enable per-document overrides:

- Description: If set to “Yes”, discussion parameters may be overridden by fields in the discussion collection.
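Several of the parameters above have fixed ranges. As a hedged illustration, the sketch below checks a set of generation settings against those ranges before you rely on them. The function validateGenerationSettings and its property names (temperature, topP, topK, candidateCount) are hypothetical and chosen for illustration; they are not necessarily the names the extension reads, and treating topK and candidateCount as positive integers is an assumption.

```javascript
// Hypothetical helper: checks generation settings against the ranges listed
// above. The property names are illustrative, not the extension's own.
function validateGenerationSettings(settings) {
  const errors = [];
  const { temperature, topP, topK, candidateCount } = settings;
  const inUnitRange = (v) => typeof v === 'number' && v >= 0 && v <= 1;

  // Temperature: values range over [0, 1], inclusive.
  if (temperature !== undefined && !inUnitRange(temperature)) {
    errors.push('temperature must be a number in [0, 1]');
  }
  // Nucleus sampling probability: a value between 0 and 1.
  if (topP !== undefined && !inUnitRange(topP)) {
    errors.push('topP must be a number in [0, 1]');
  }
  // Top-k: assumed here to be a positive integer (a number of tokens).
  if (topK !== undefined && !(Number.isInteger(topK) && topK > 0)) {
    errors.push('topK must be a positive integer');
  }
  // Candidate count: defaults to one; assumed to be a positive integer.
  if (candidateCount !== undefined &&
      !(Number.isInteger(candidateCount) && candidateCount >= 1)) {
    errors.push('candidateCount must be an integer of at least 1');
  }
  return errors;
}

console.log(validateGenerationSettings({ temperature: 0.2, topK: 40 }));
console.log(validateGenerationSettings({ temperature: 1.5, candidateCount: 0 }));
```

An empty array means the settings passed every check; otherwise each entry names one out-of-range value.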

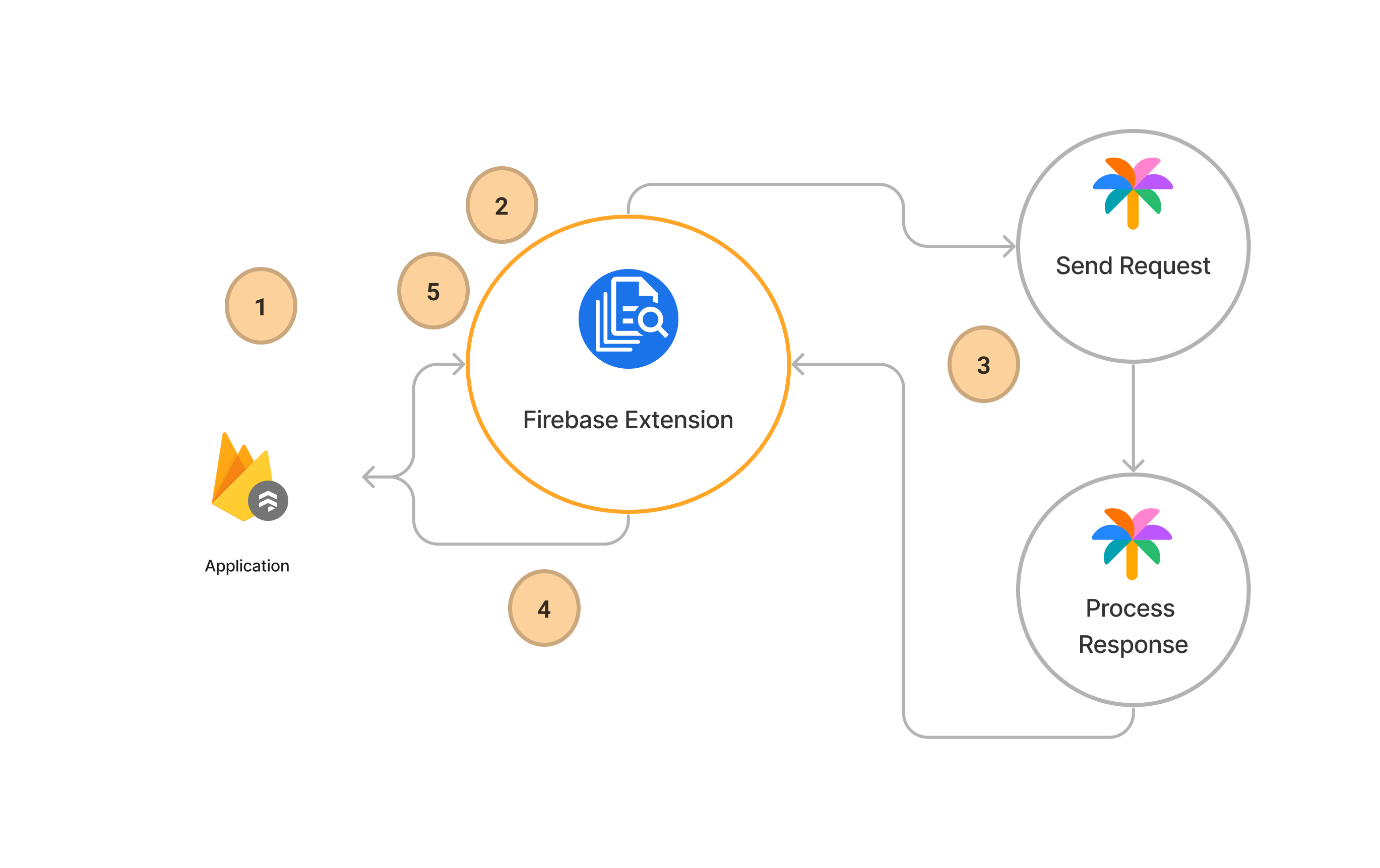
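To make the decoding parameters more concrete, here is a toy sketch of how temperature, top-k, and nucleus (top-p) sampling narrow down the choice of the next token. It works on a made-up probability table, and the helper names (softmax, topK, topP, sample) are hypothetical; it illustrates the general techniques rather than how the PaLM API implements them. This sketch only accepts a temperature above 0.

```javascript
// Toy sketch of temperature, top-k and nucleus (top-p) sampling over a
// made-up next-token distribution. Illustrative only; not PaLM's internals.

// Convert raw scores (logits) to probabilities, scaled by temperature.
// This sketch requires temperature > 0; lower values sharpen the distribution.
function softmax(logits, temperature) {
  if (!(temperature > 0)) throw new Error('temperature must be > 0 here');
  const scaled = logits.map((l) => l / temperature);
  const max = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((s) => Math.exp(s - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Top-k: keep only the k most probable tokens.
function topK(tokens, k) {
  return [...tokens].sort((a, b) => b.p - a.p).slice(0, k);
}

// Top-p: keep the smallest set of most probable tokens whose cumulative
// probability is at least p.
function topP(tokens, p) {
  const sorted = [...tokens].sort((a, b) => b.p - a.p);
  const kept = [];
  let cumulative = 0;
  for (const t of sorted) {
    kept.push(t);
    cumulative += t.p;
    if (cumulative >= p) break;
  }
  return kept;
}

// Draw one token from a (possibly filtered) set, renormalizing its weights.
function sample(tokens, rand = Math.random) {
  const total = tokens.reduce((s, t) => s + t.p, 0);
  let r = rand() * total;
  for (const t of tokens) {
    r -= t.p;
    if (r < 0) return t.token;
  }
  return tokens[tokens.length - 1].token; // guard against rounding error
}

const tokens = [
  { token: 'cat', p: 0.5 },
  { token: 'dog', p: 0.3 },
  { token: 'fox', p: 0.15 },
  { token: 'owl', p: 0.05 },
];
console.log(topK(tokens, 2).map((t) => t.token));
console.log(topP(tokens, 0.9).map((t) => t.token));
console.log(sample(topP(tokens, 0.9)));
console.log(softmax([2, 1, 0], 0.5), softmax([2, 1, 0], 1));
```

A lower top-p or top-k shrinks the candidate set, while a lower temperature concentrates probability on the top token before any filtering happens.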

How it works

After installation and configuration, the extension listens for new documents added to the configured collection. To see it in action:

- Add a new document: Add a document with a “prompt” field containing text, and the extension prompts the AI for a response.

- Tracking status: While the AI generates a response, the extension updates the document with a status field that reports PROCESSING, COMPLETED, or ERRORED.

- Successful responses: When the AI returns a successful response, the extension adds a new field containing the response.

- Maintaining context: Existing documents contain a startTime field generated by the extension. When you add a new document, the existing documents are sent along with the request as context, which allows the AI to base its response on the previous conversation.

- Regenerating a response: Updating the status field to anything other than “COMPLETED” regenerates the response.
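The behavior described above can be modeled with plain objects standing in for Firestore documents. This is a simplified, hypothetical sketch rather than the extension's source: the field names mirror the examples in this post (prompt, response, status, createTime), the author labels 'user' and 'model' are placeholders, and the regeneration rule only covers a status changing from COMPLETED to another value. It reads the state whether status is stored as a plain string or as an object with a state property.

```javascript
// Simplified, hypothetical model of the extension's behavior. Plain objects
// stand in for Firestore documents; field names follow this post's examples.

// Read the state whether status is a string or an object like { state }.
function getState(status) {
  return status && typeof status === 'object' ? status.state : status;
}

// Collect earlier completed exchanges as context for a new prompt.
// (The extension's own query sorts in descending order; this sketch simply
// lists the history oldest first.) 'user' and 'model' are placeholder authors.
function buildHistory(messages, currentId) {
  return messages
    .filter((m) => m.id !== currentId && getState(m.status) === 'COMPLETED')
    .sort((a, b) => a.createTime - b.createTime)
    .flatMap((m) => [
      { author: 'user', content: m.prompt },
      { author: 'model', content: m.response },
    ]);
}

// Decide whether a write to a document should trigger (re)generation.
function shouldGenerate(before, after) {
  if (!after || typeof after.prompt !== 'string' || after.prompt === '') {
    return false; // nothing to send without a prompt
  }
  if (!before) return true; // a newly added document
  // Simplified rule: moving the status away from COMPLETED regenerates.
  return getState(before.status) === 'COMPLETED' &&
    getState(after.status) !== 'COMPLETED';
}

const messages = [
  { id: 'm1', prompt: 'Hi', response: 'Hello!', status: { state: 'COMPLETED' }, createTime: 1 },
  { id: 'm2', prompt: 'How are you?', status: { state: 'PROCESSING' }, createTime: 2 },
];
console.log(buildHistory(messages, 'm2'));
console.log(shouldGenerate(undefined, messages[1]));
```

Keeping the extension's own status transitions (PROCESSING to COMPLETED) from triggering another run is what stops the model from regenerating in a loop.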

Steps to connect to the Chatbot with PaLM API

Before you begin, ensure that the Firebase SDK is initialized and you can access Firestore.

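```javascript
import { initializeApp } from 'firebase/app';
import { getFirestore } from 'firebase/firestore';

const firebaseConfig = {
  // Your Firebase configuration keys
};

// Initialize Firebase and get a Firestore instance
const app = initializeApp(firebaseConfig);
const firestoreRef = getFirestore(app);
```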

Next, add a new document to the configured collection to start the conversation:

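```javascript
import { addDoc, collection } from 'firebase/firestore';

/** Add a new Firestore document ('collection-name' is your configured collection path) */
await addDoc(collection(firestoreRef, 'collection-name'), {
  prompt: 'This is an example',
});
```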
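When the configured collection path contains wildcards, such as users/{uid}/discussions/{discussionId}/messages, the client has to write to a concrete path. The helper below is a hypothetical sketch that fills in the wildcards; its name and the example values are assumptions for illustration. The resulting string could then be passed to collection(firestoreRef, path) in place of 'collection-name'.

```javascript
// Hypothetical helper: replaces {wildcard} segments in a configured
// collection path with concrete values.
function resolveCollectionPath(template, values) {
  return template.replace(/\{(\w+)\}/g, (match, name) => {
    const value = values[name];
    if (value === undefined) {
      throw new Error(`Missing value for wildcard {${name}}`);
    }
    // A slash would add extra path segments, so reject it.
    if (String(value).includes('/')) {
      throw new Error(`Value for {${name}} must not contain '/'`);
    }
    return String(value);
  });
}

const path = resolveCollectionPath(
  'users/{uid}/discussions/{discussionId}/messages',
  { uid: 'alice', discussionId: 'trip-planning' },
);
console.log(path);
```

Rejecting slashes keeps the resolved path at the same depth as the template, so it still points at a collection rather than a document.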

Listen for any changes to the collection to display the conversation.

```javascript
import { collection, onSnapshot, orderBy, query } from 'firebase/firestore';

/** Create a Firestore query ordered by the configured order field */
const collectionRef = collection(firestoreRef, 'collection-name');
const q = query(collectionRef, orderBy('createTime'));

/** Listen for any changes */
onSnapshot(q, (snapshot) => {
  snapshot.docs.forEach((doc) => {
    /** Get the prompt, response and status */
    const { prompt, response, status } = doc.data();

    /** Update the UI status */
    console.log(status);

    /** Update the UI prompt */
    console.log(prompt);

    /** Update the UI AI response */
    console.log(response);
  });
});
```
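The listener above only logs the raw fields. As a sketch of what updating the UI might involve, the hypothetical helper below turns one document's data into display lines, showing a placeholder while a response is pending and a message when generation has failed. The helper name and display strings are illustrative assumptions; it accepts status as either a string or an object with a state property.

```javascript
// Hypothetical view helper: maps one message document's fields to lines a
// chat UI could render. Accepts status as a string or as { state }.
function toDisplayLines({ prompt, response, status }) {
  const state = status && typeof status === 'object' ? status.state : status;
  const lines = [`You: ${prompt}`];
  if (state === 'COMPLETED' && response !== undefined) {
    lines.push(`AI: ${response}`);
  } else if (state === 'ERRORED') {
    lines.push('AI: (the response failed; try regenerating it)');
  } else {
    // Otherwise (for example no status yet, or PROCESSING): still pending.
    lines.push('AI: ...');
  }
  return lines;
}

const conversation = [
  { prompt: 'Hello', response: 'Hi there!', status: { state: 'COMPLETED' } },
  { prompt: 'Tell me a joke', status: { state: 'PROCESSING' } },
];
for (const message of conversation) {
  console.log(toDisplayLines(message).join('\n'));
}
```

Inside the onSnapshot callback, each document's data() result could be passed to toDisplayLines instead of being logged field by field.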

Conclusion

By installing the Chatbot with PaLM API extension, you can converse with the Google PaLM API simply by adding a Firestore document. Its parameters make responses highly configurable, giving developers many customization options.