How to Use the Detect Objects with Cloud Vision AI Firebase Extension

Firebase

Sep 9, 2023

Incorporating machine learning capabilities into mobile or web applications has become increasingly accessible and popular. Google’s Cloud Vision AI offers a powerful solution for object detection, allowing developers to integrate sophisticated image analysis into their applications.

With the help of Firebase Extensions, the integration process becomes even more streamlined.

Prerequisites

- Set up a Firebase project.

- Set up Cloud Storage for Firebase.

- Upgrade your Firebase project to the Blaze plan. Learn more about the extension’s billing.

Google Cloud Vision AI

Google Cloud Vision AI is a powerful image analysis tool that allows developers to integrate machine learning models into applications to understand the content of images. With its robust set of features, including object and face detection, text extraction, and landmark recognition, the API provides detailed insights that can be used for a wide range of applications, from content moderation to interactive marketing.

Users can easily interact with the API by sending image data through HTTP requests to the vision.googleapis.com endpoint and receiving structured information in the form of JSON responses.
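To give a feel for what a direct call involves, here is a minimal Dart sketch that only builds the JSON body for an object localization request. The function name, bucket, and file name are hypothetical; authentication and the HTTP call itself are left out.

```dart
import 'dart:convert';

/// Builds the JSON body for a Vision API `images:annotate` request that asks
/// for object localization on an image already stored in Cloud Storage.
String buildObjectLocalizationRequest(String gcsUri, {int maxResults = 10}) {
  final body = {
    'requests': [
      {
        'image': {
          'source': {'gcsImageUri': gcsUri},
        },
        'features': [
          {'type': 'OBJECT_LOCALIZATION', 'maxResults': maxResults},
        ],
      },
    ],
  };
  return jsonEncode(body);
}

void main() {
  // Hypothetical bucket and file name, for illustration only.
  final json = buildObjectLocalizationRequest('gs://my-bucket/dog.jpg');
  print(json);
  // The body would be sent as a POST request to:
  // https://vision.googleapis.com/v1/images:annotate
}
```

Even in this reduced form you would still have to handle credentials, errors, and storing the response yourself.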

While you can call this API directly, the Detect Objects with Cloud Vision AI Firebase Extension handles the request, the response, and the storage of results for you.

Detect Objects with Cloud Vision AI Firebase Extension

The Detect Objects with Cloud Vision AI Firebase extension uses the “Object Localization (OBJECT_LOCALIZATION)” feature of Google Cloud’s Vision API to detect and locate objects in JPG or PNG images uploaded to Cloud Storage.

For every detected object, the API offers:

- A descriptive text that defines the object in understandable terms.

- A confidence score highlighting the API’s assurance of its detection.

- Normalized vertices (each coordinate in the range [0, 1]) that outline a bounding polygon, indicating the object’s relative position in the image.
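Because the vertices are normalized, an app has to scale them by the image dimensions before drawing a box. The following is a small Dart sketch of that scaling; the class and function names are hypothetical, and treating a missing coordinate as 0 is an assumption of this example.

```dart
/// A point in pixel coordinates.
class PixelPoint {
  final int x;
  final int y;
  const PixelPoint(this.x, this.y);

  @override
  String toString() => '($x, $y)';
}

/// Scales normalized vertices (each coordinate in [0, 1]) to pixel
/// coordinates for an image of the given size.
List<PixelPoint> toPixels(
  List<Map<String, double>> normalizedVertices,
  int imageWidth,
  int imageHeight,
) {
  return normalizedVertices.map((v) {
    // A missing coordinate is treated as 0 here (an assumption of this sketch).
    final x = (v['x'] ?? 0.0) * imageWidth;
    final y = (v['y'] ?? 0.0) * imageHeight;
    return PixelPoint(x.round(), y.round());
  }).toList();
}

void main() {
  // Hypothetical bounding polygon covering the central part of the image.
  final vertices = [
    {'x': 0.25, 'y': 0.25},
    {'x': 0.75, 'y': 0.25},
    {'x': 0.75, 'y': 0.75},
    {'x': 0.25, 'y': 0.75},
  ];
  // prints [(200, 150), (600, 150), (600, 450), (200, 450)]
  print(toPixels(vertices, 800, 600));
}
```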

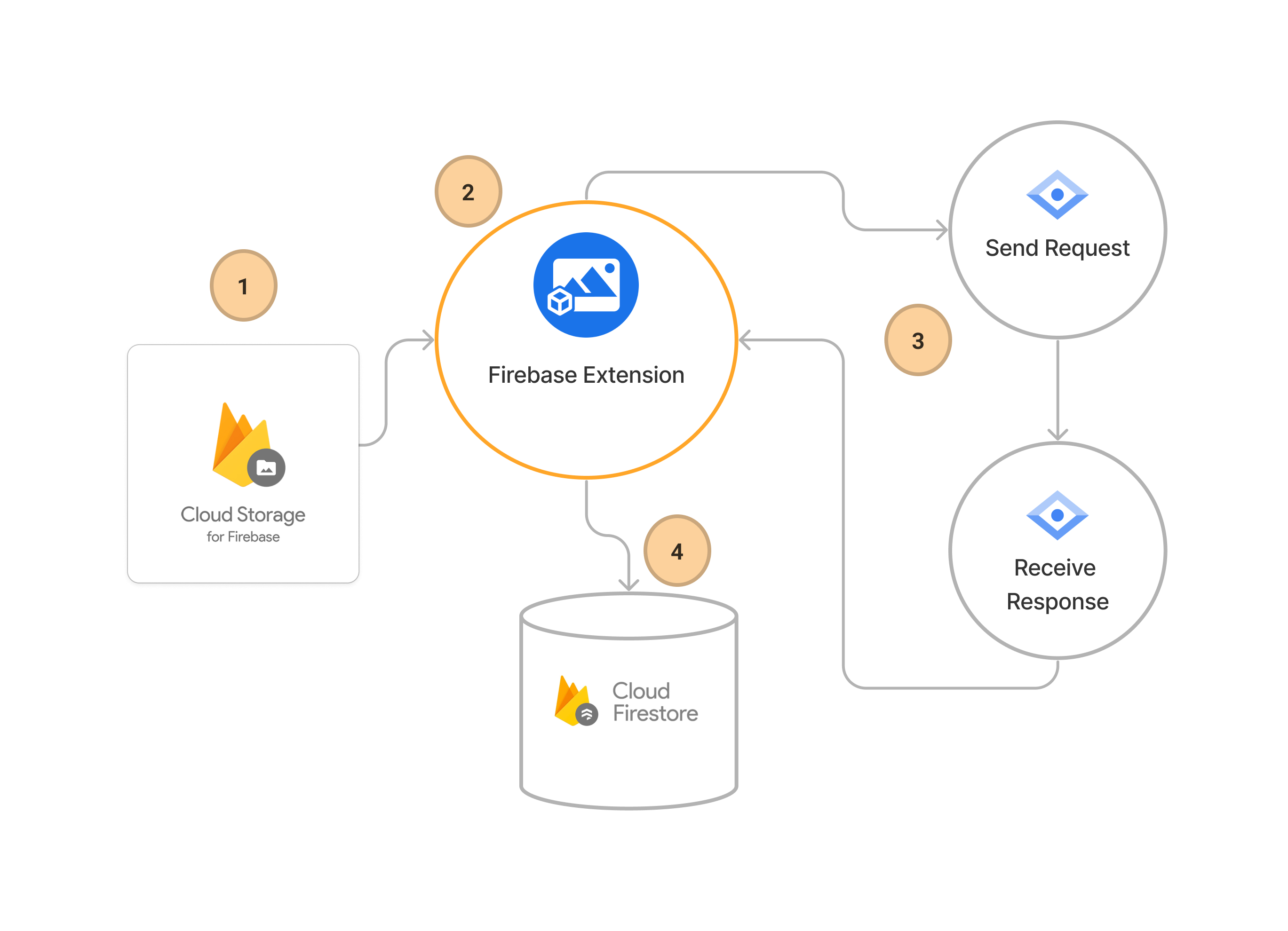

When setting up the extension, developers are directed to specify a Cloud Storage bucket for image uploads and a Firestore collection for recording the detections.

When an image is uploaded to the designated bucket, a Cloud Function is triggered. This function uses the Object Localization feature of the Vision API to identify objects and writes the findings to Firestore, along with the complete gs:// path of the uploaded file in Cloud Storage.

Extension Installation

You can install the extension either through the Firebase web console or through its command line interface.

Option 1: Using the Firebase CLI

To install the extension through the Firebase CLI:

firebase ext:install jauntybrain/storage-detect-objects --project=projectId_or_alias

Option 2: Using the Firebase Console

Visit the extension homepage and click “Install in Firebase Console”.

Configure Extension

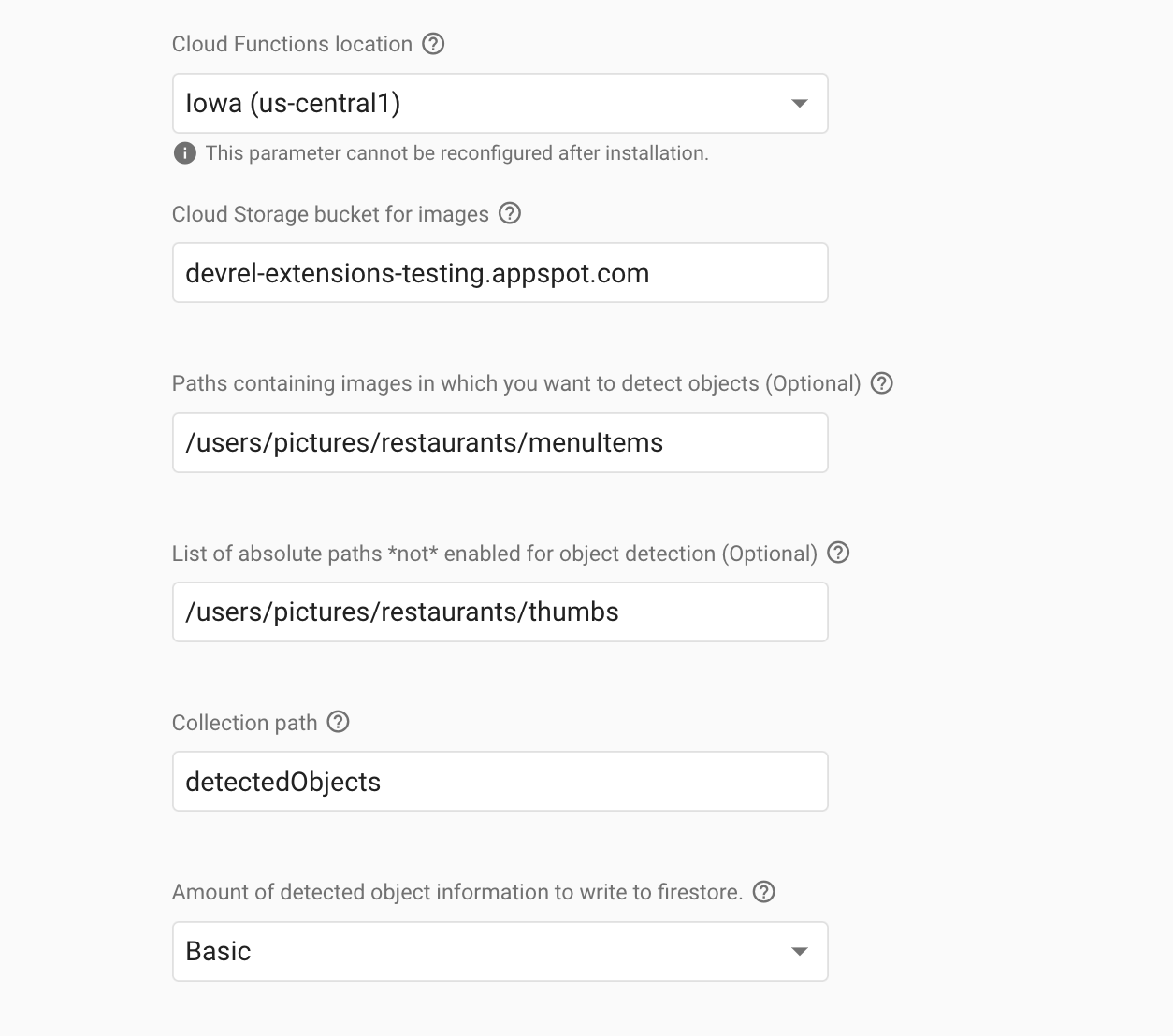

While configuring the extension, you can specify the following parameters:

Cloud Function Location: This is where you want to deploy the functions created for this extension. This parameter cannot be reconfigured after installation. Read more about Locations here.

Cloud Storage bucket for images: This is the Cloud Storage bucket to which you will upload the images you want analyzed for objects.

Paths containing images in which you want to detect objects (Optional): Restrict storage-detect-objects to only process images in specific locations in your Storage bucket by supplying a comma-separated list of absolute paths.

- For example, specifying the path /users/pictures/restaurants/menuItems limits processing to images stored under that path.

List of absolute paths not enabled for object detection (Optional): Ensure storage-detect-objects does not process images in specific locations in your Storage bucket by supplying a comma-separated list of absolute paths.

- For example, to exclude the images stored in /users/pictures/restaurants/thumbs, specify that path.
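To make the two path parameters more concrete, here is a Dart sketch of how include and exclude lists of this kind could be evaluated. It is not the extension's actual code: the function names are hypothetical, and the rule that an exclusion wins over an inclusion is an assumption of this example.

```dart
/// Returns true when [objectPath] is equal to [dir] or lies beneath it.
bool isUnder(String objectPath, String dir) {
  final base = dir.endsWith('/') ? dir : '$dir/';
  return objectPath == dir || objectPath.startsWith(base);
}

/// Splits a comma-separated parameter value into trimmed, non-empty paths.
List<String> parsePaths(String? value) => (value ?? '')
    .split(',')
    .map((p) => p.trim())
    .where((p) => p.isNotEmpty)
    .toList();

/// Decides whether an uploaded object should be processed.
/// Assumption: exclusions win, and an empty include list allows everything.
bool shouldProcess(String objectPath, {String? include, String? exclude}) {
  final included = parsePaths(include);
  final excluded = parsePaths(exclude);
  if (excluded.any((dir) => isUnder(objectPath, dir))) return false;
  if (included.isEmpty) return true;
  return included.any((dir) => isUnder(objectPath, dir));
}

void main() {
  const include = '/users/pictures/restaurants/menuItems';
  const exclude = '/users/pictures/restaurants/thumbs';
  // prints true
  print(shouldProcess('/users/pictures/restaurants/menuItems/pizza.png',
      include: include, exclude: exclude));
  // prints false
  print(shouldProcess('/users/pictures/restaurants/thumbs/pizza.png',
      include: include, exclude: exclude));
}
```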

Collection path: This is the path of the Firestore collection where the list of detected objects will be written.

Amount of detected object information to write to Firestore: This determines how much information about detected objects should be written to Firestore.

Depending on the configuration mode set:

- In ‘basic’ mode, only the detected objects’ names are added to the output.

- In ‘full’ mode, the entire annotation for each detected object is added.

This allows for flexibility in choosing the granularity of object information to store in Firestore.
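The difference between the two modes can be pictured with a short Dart sketch. The function name and the sample annotation are hypothetical, and the code only mirrors the behavior described above rather than reproducing the extension's implementation.

```dart
/// Shapes annotations for storage, depending on the configured mode.
/// In 'basic' mode only object names are kept; in 'full' mode the whole
/// annotation map is kept.
List<Object?> shapeDetections(
  List<Map<String, Object?>> annotations,
  String mode,
) {
  if (mode == 'basic') {
    return annotations.map((a) => a['name']).toList();
  }
  return List<Object?>.of(annotations);
}

void main() {
  // Hypothetical annotation, for illustration only.
  final annotations = [
    {
      'name': 'Dog',
      'score': 0.92,
      'boundingPoly': {
        'normalizedVertices': [
          {'x': 0.1, 'y': 0.2},
          {'x': 0.9, 'y': 0.2},
          {'x': 0.9, 'y': 0.8},
          {'x': 0.1, 'y': 0.8},
        ],
      },
    },
  ];
  print(shapeDetections(annotations, 'basic')); // prints [Dog]
  print(shapeDetections(annotations, 'full')); // prints the full annotation
}
```

If your app only needs labels, ‘basic’ keeps documents small; ‘full’ is the mode to choose when you also want scores or bounding polygons.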

How does it work?

By integrating this extension into your Firebase project, you can implement object detection functionality in your applications without needing to manage backend infrastructure. Once installed, the extension deploys a Cloud Function that runs whenever an image is uploaded and writes a list of detected objects, with their corresponding information, to Firestore.

Detecting Objects

You can use any frontend technology to build an example to see how this extension works. What you need to do is:

- Create a page that enables the user to pick an image

- Upload this image to Cloud Storage through the Firebase SDK

- Listen for the data written to Firestore

- Display what is detected

We built a Flutter app to demonstrate the Firebase extension in action for object detection, but if you follow the steps above you can use the extension on any platform for which there is a Firebase SDK or integration.

Here are the key steps to implement in your frontend application with the Firebase SDK.

Initialize Firebase: In the Flutter app, initialize Firebase for your project.

await Firebase.initializeApp( options: DefaultFirebaseOptions.currentPlatform );Image Upload and Selection: Allow users to either select an image from their gallery or capture one using the camera. Once an image is selected or captured, upload it to Cloud Storage through Firebase. Ensure you keep track of your

imageNameto reference it, as we’ll use that to find the data for the image in Firestore.

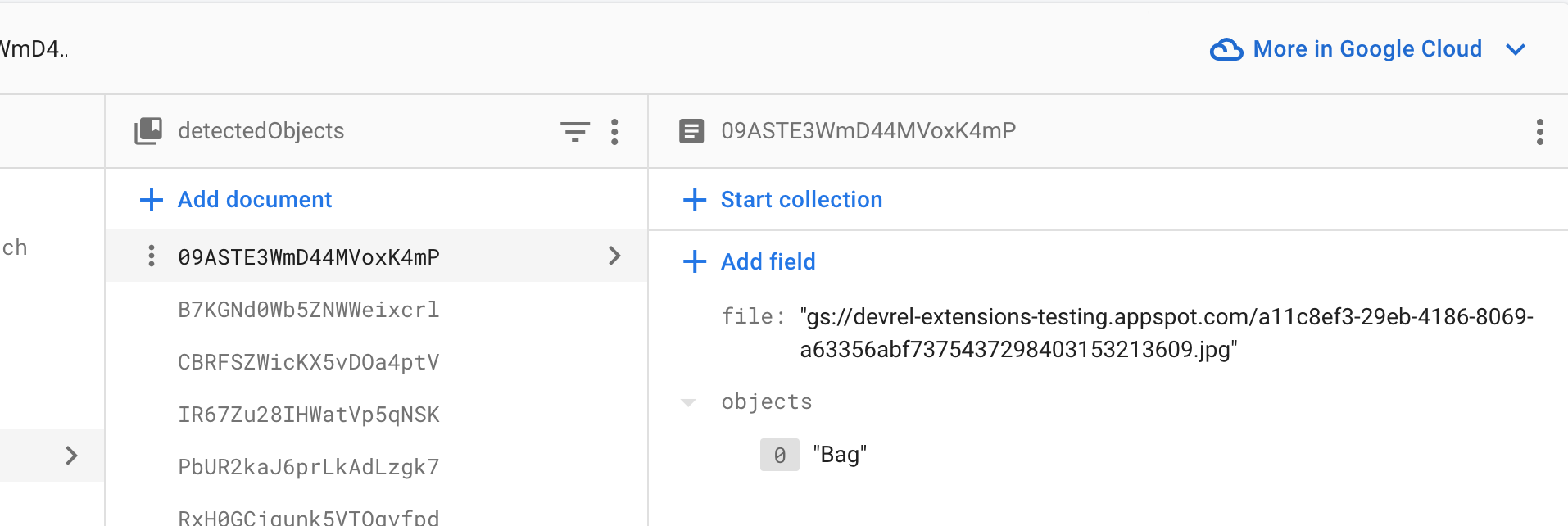

An Overview of Firebase Cloud StorageListen to Firestore: To monitor the results, keep an eye on Firestore. When you upload an image, the Firebase extension detects objects within the image and then records the results in a Firestore collection named

detectedObjects. The uploaded file’s name serves as its identifier. Check Firebase realtime updates for Cloud Firestore to learn more about snapshot listeners.final objectRef = FirebaseFirestore.instance .collection('detectedObjects') .where('file', isEqualTo: "gs://$YOUR_BUCKET/$imageName") .withConverter<ObjectData>( fromFirestore: (snap, _) => ObjectData.fromJson(snap.data()!), toFirestore: (obj, _) => obj.toJson(), );Display Detected Objects: After data has been written to Firestore, the app retrieves and displays the detected objects. This display is based on the filename from the Storage (gs://) path. Ensure you filter the documents to match the file name during this step.

StreamBuilder( stream: objectRef.snapshots(), )
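The query above relies on an ObjectData class that this article does not show. A minimal sketch of what such a model might look like follows. The `file` field comes from the query itself; the `objects` field name, the gsPath helper, and the sample values are assumptions made for illustration, with ‘basic’ mode output in mind.

```dart
/// Hypothetical model for one document written by the extension.
class ObjectData {
  /// Full gs:// path of the analyzed image.
  final String file;

  /// Detected object names (assumed field name: `objects`).
  final List<String> objects;

  const ObjectData({required this.file, required this.objects});

  factory ObjectData.fromJson(Map<String, dynamic> json) {
    final raw = (json['objects'] as List<dynamic>?) ?? const [];
    return ObjectData(
      file: (json['file'] as String?) ?? '',
      objects: raw.map((o) => o.toString()).toList(),
    );
  }

  Map<String, dynamic> toJson() => {'file': file, 'objects': objects};
}

/// Builds the gs:// path used to match a document to an uploaded image.
String gsPath(String bucket, String imageName) => 'gs://$bucket/$imageName';

void main() {
  // Hypothetical bucket, file name, and document contents.
  final data = ObjectData.fromJson({
    'file': gsPath('my-bucket', 'dog.jpg'),
    'objects': ['Dog', 'Ball'],
  });
  print(data.file); // prints gs://my-bucket/dog.jpg
  print(data.objects); // prints [Dog, Ball]
}
```

Keeping the model free of Firebase imports makes it easy to unit test separately from the Firestore query.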

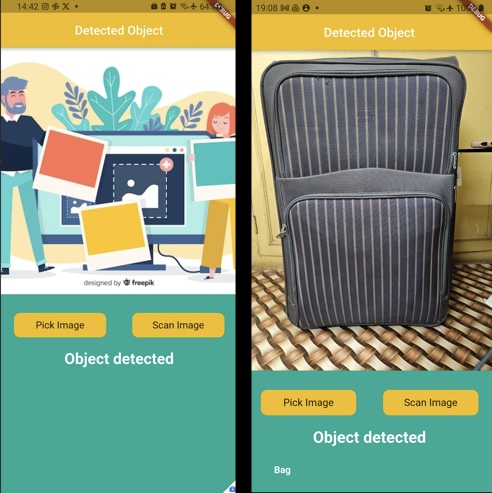

Result

Once the process is completed, the app displays a list of detected objects for the uploaded image.

If you selected ‘full’ mode, you will receive the entire annotation for each detected object. This output corresponds to the Google Cloud Vision API’s response for objects detected in an image.

The full output provides details about the object detected in an image, such as the name of the object and the confidence score. In addition, it often includes normalized vertex coordinates that define the object’s relative position in the image.

The typical fields in a LocalizedObjectAnnotation might include:

- name: The name of the detected object, e.g., “dog”, “car”, etc.

- score: Represents the confidence score of the detected object.

- boundingPoly: Normalized vertices of the detected object’s bounding polygon, which provides a rough outline of the object in the image.

The specifics and availability of these fields might change based on the version of the API and its updates.
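As a rough illustration of working with these fields, the Dart sketch below parses a JSON list of annotations and keeps only the objects above a confidence threshold. The class name, function names, threshold, and sample data are hypothetical, and missing fields fall back to defaults as an assumption of this example.

```dart
import 'dart:convert';

/// Converts a JSON number to a double, defaulting to 0 when absent.
double asDouble(Object? value) => value is num ? value.toDouble() : 0.0;

/// One detected object, limited to the fields described above.
class DetectedObject {
  final String name;
  final double score;
  final List<List<double>> vertices; // [x, y] pairs, normalized to [0, 1]

  const DetectedObject(this.name, this.score, this.vertices);

  factory DetectedObject.fromJson(Map<String, dynamic> json) {
    final poly = (json['boundingPoly'] as Map<String, dynamic>?) ?? const {};
    final raw = (poly['normalizedVertices'] as List<dynamic>?) ?? const [];
    return DetectedObject(
      (json['name'] as String?) ?? '',
      asDouble(json['score']),
      [
        for (final v in raw.cast<Map<String, dynamic>>())
          [asDouble(v['x']), asDouble(v['y'])],
      ],
    );
  }
}

/// Parses a JSON array of annotations and keeps those with score >= minScore.
List<DetectedObject> confidentObjects(String json, double minScore) {
  final list = jsonDecode(json) as List<dynamic>;
  return list
      .cast<Map<String, dynamic>>()
      .map((m) => DetectedObject.fromJson(m))
      .where((o) => o.score >= minScore)
      .toList();
}

void main() {
  // Hypothetical annotations, for illustration only.
  const sample = '''
  [
    {"name": "Dog", "score": 0.92, "boundingPoly": {"normalizedVertices": [
      {"x": 0.1, "y": 0.2}, {"x": 0.9, "y": 0.2},
      {"x": 0.9, "y": 0.8}, {"x": 0.1, "y": 0.8}]}},
    {"name": "Ball", "score": 0.41, "boundingPoly": {"normalizedVertices": []}}
  ]''';
  for (final o in confidentObjects(sample, 0.5)) {
    print('${o.name}: ${o.score}'); // prints Dog: 0.92
  }
}
```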

Conclusion

This guide explored how to easily incorporate machine learning features into mobile apps using Google’s Cloud Vision AI and Firebase Extensions. By adhering to the highlighted steps, developers can smoothly add object detection capabilities, elevating their app’s user experience.

Firebase Extensions streamline this integration, connecting Firebase with cutting-edge technologies and empowering developers to craft more dynamic and feature-packed apps.