AI-Powered Video Labeling with Firebase Extensions

Firebase

Nov 2, 2023

Introduction

In today’s digital era, where video content plays a significant role in everyone’s life, effectively understanding and categorizing this content is crucial. That’s where the “Label Videos with Cloud Video AI” Firebase extension comes into play.

This powerful tool leverages the capabilities of Google Cloud’s Video Intelligence API to automate the process of extracting labels from videos. This process isn’t just about identifying objects within a video; it’s about unlocking many possibilities for how video content can be utilized and understood.

Integrating this extension with Cloud Storage and the Cloud Video Intelligence API transforms your video content into a rich data source. The applications are vast and versatile, whether for enhancing user-generated content platforms or analyzing retail customer behavior.

Video Intelligence API

At the core of this extension is Google’s Video Intelligence API, a service built on pre-trained machine learning models. These models recognize a wide range of objects, places, and activities in stored and streaming video. The API stands out for its quality, its efficiency for everyday use cases, and its continuous improvement as new concepts are introduced.

The Video Intelligence API is a testament to machine learning and AI advancements, providing a seamless way to process and analyze video data. Its ability to provide detailed insights into video content opens up new avenues for content moderation, search and recommendation systems, and even aiding visually impaired users by offering descriptive content analysis.

Getting Started

The first step in using the Label Videos with Cloud Video AI extension is to have a Firebase project ready. If you haven’t created one yet, follow the setup instructions in the Firebase documentation.

Once your project is prepared, you can add the label videos extension to your application.

Extension Installation

You can install the “Label Videos with Cloud Video AI” extension from the Firebase Console or with the Firebase CLI.

Option 1: Firebase Console

Navigate to the Firebase Extensions page and find the “Label Videos with Cloud Video AI” extension. Click on “Install in Firebase Console” and follow the prompts to set your configuration parameters.

Option 2: Firebase CLI

Alternatively, you can install the extension via the Firebase CLI with the following command:

firebase ext:install googlecloud/storage-label-videos --project=projectId_or_alias

Configure The Extension

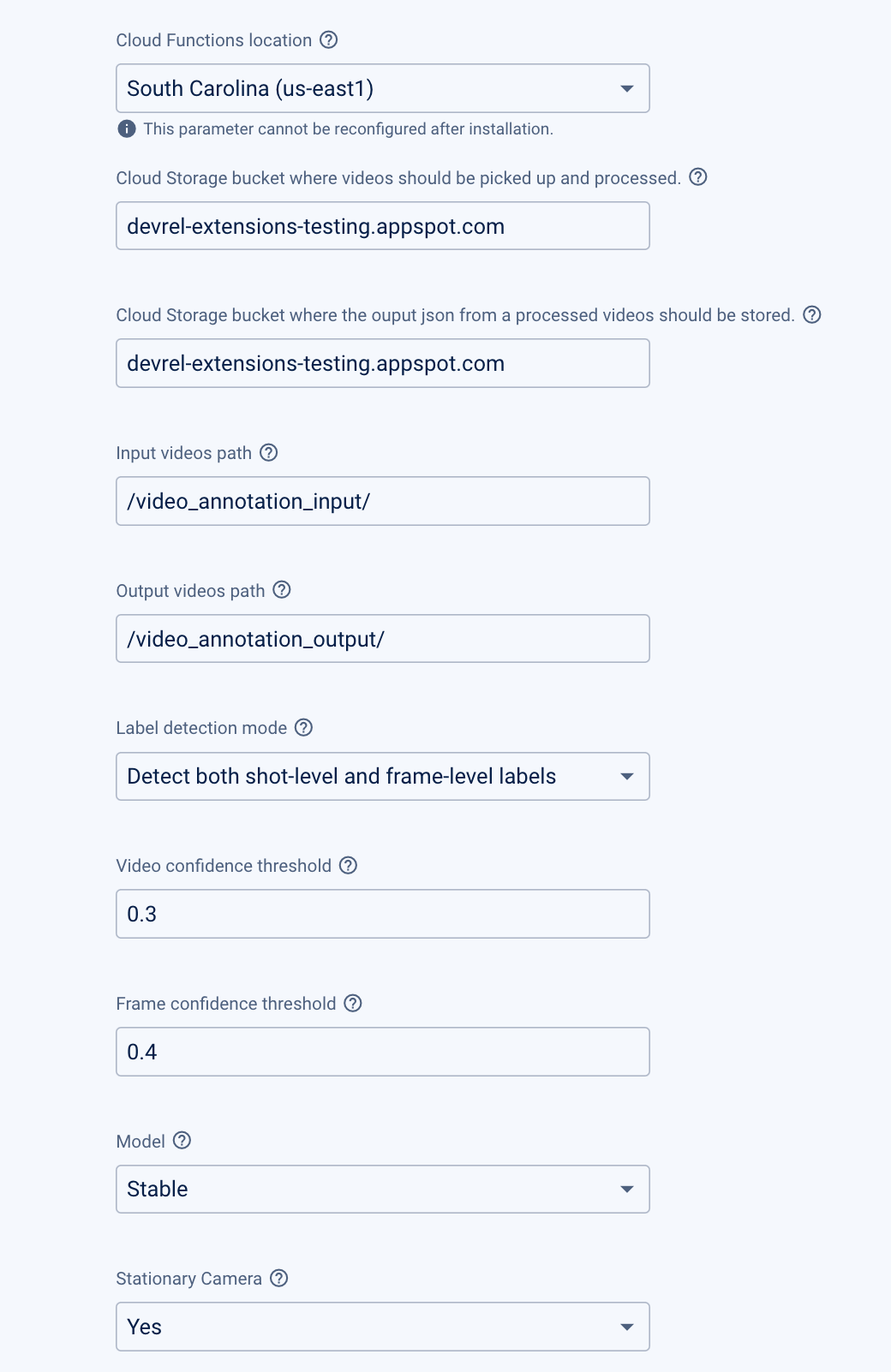

To optimize the “Label Videos with Cloud Video AI” Firebase extension for your specific needs, it’s essential to understand and set its configuration parameters appropriately.

Here’s a detailed look at each setting:

- Cloud Functions Location:
  - Description: Select the cloud region where video annotation will occur. Choose a location close to your stored data and your users.
  - Options: See the documentation on Cloud Functions locations and Video Intelligence API locations for the available regions.

- Cloud Storage Bucket Where Videos Should Be Picked Up and Processed:
  - Description: Specify the Cloud Storage bucket from which your videos will be picked up for processing.
  - Example: devrel-extensions-testing.appspot.com

- Cloud Storage Bucket Where the Output JSON from Processed Videos Should Be Stored:
  - Description: Define the Cloud Storage bucket where the JSON outputs from processed videos will be stored.
  - Example: devrel-extensions-testing.appspot.com

- Input Videos Path:
  - Description: Set the storage path in the input bucket from which the extension should process videos.
  - Example: /video_annotation_input/

- Output Videos Path:
  - Description: Set the storage path in the output bucket where the JSON output should be stored.
  - Example: /video_annotation_output/

- Label Detection Mode:
  - Description: Choose the type of labels to detect: shot-level labels, frame-level labels, or both.
  - Options:
    - Detect shot-level labels (SHOT_MODE): In this mode, the API analyzes each shot or scene within the video and assigns labels to each shot. A ‘shot’ is a series of frames captured without interruption, like a single continuous take in a movie. This mode is useful for understanding the content and context of each scene in the video.
    - Detect frame-level labels (FRAME_MODE): In this mode, the API labels individual frames within the video. This is more granular than shot-level detection and can be useful for detailed analysis of specific moments or actions within the video.
    - Detect both shot-level and frame-level labels (SHOT_AND_FRAME_MODE): This is a comprehensive mode where the API performs both shot-level and frame-level labeling.

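- Video Confidence Threshold:
  - Description: Set the confidence threshold for filtering labels from video-level and shot-level detections. A higher value keeps only labels the model is more certain about; a lower value returns more labels, including less certain ones.
  - Example: 0.3 (default value; the Video Intelligence API documents a valid range of 0.1 to 0.9 and clips values outside it)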

- Frame Confidence Threshold:
  - Description: Similar to the video confidence threshold, this setting applies to frame-level detections.
  - Example: 0.4 (default value; the Video Intelligence API documents a valid range of 0.1 to 0.9 and clips values outside it)

- Model:
  - Description: Select the specific model for label detection. This choice can impact the types of labels detected and the overall accuracy.
  - Options:
    - Stable (builtin/stable): The extension uses a stable, well-tested model. ‘Stable’ models have typically been extensively used and validated for performance and accuracy. They are less likely to change or be updated frequently, offering consistency in label detection results over time.
    - Latest (builtin/latest): The extension uses the most recent version of the labeling model available. These models may include the latest advancements in machine learning and potentially offer improved accuracy or the ability to detect a broader range of labels. However, they could be subject to more frequent updates or changes.

- Stationary Camera:
  - Description: Indicate whether the video was shot from a stationary camera. This setting can improve detection accuracy for moving objects.
  - Options: Yes (true) or No (false). The default is true, but it changes to false if Label Detection Mode is set to Detect both shot-level and frame-level labels.

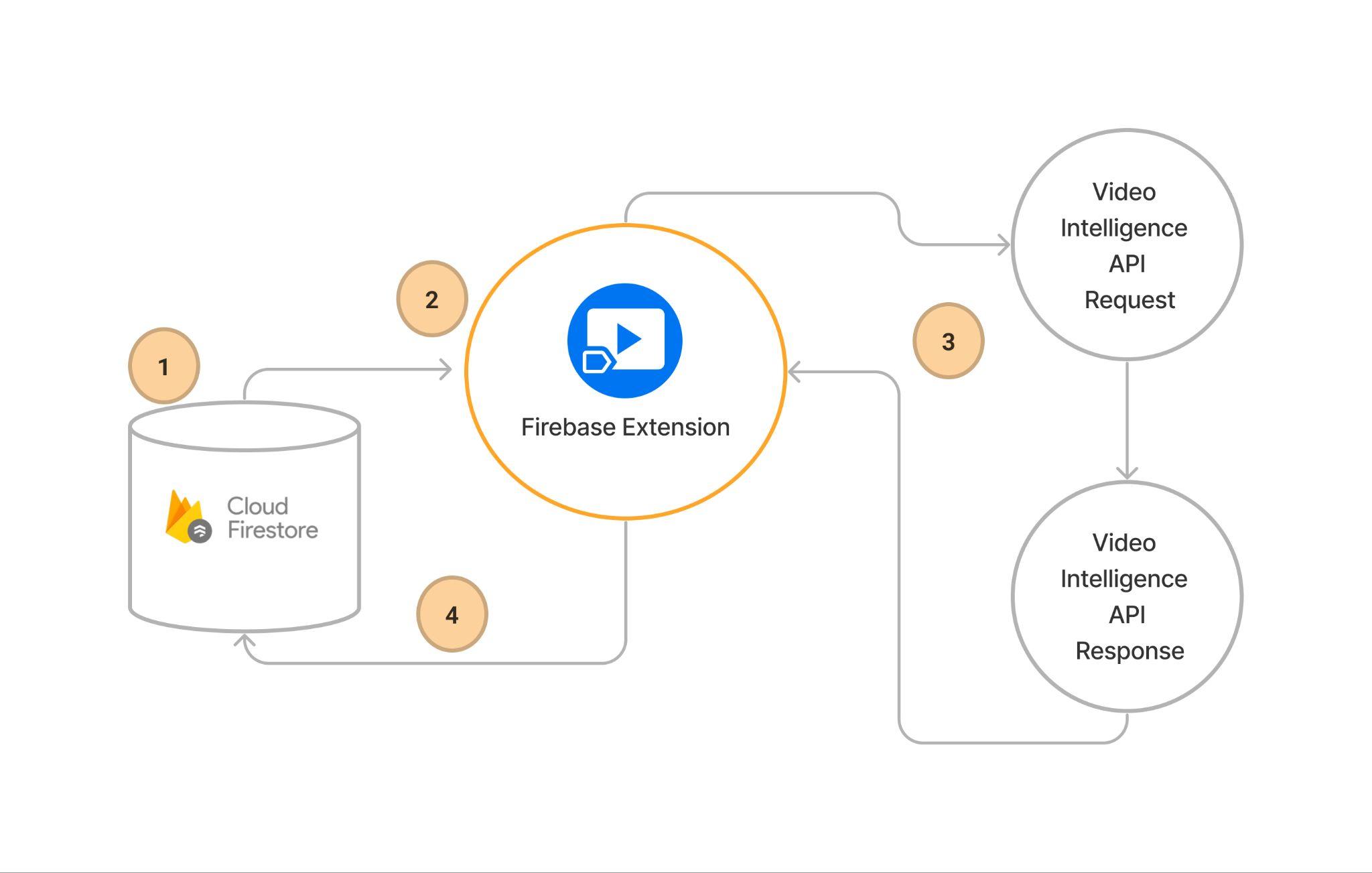
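To make the settings above concrete, here is a minimal TypeScript sketch that models them as a typed object and maps the detection-related ones onto the field names of the Video Intelligence API's label detection config. The `ExtensionSettings` type, its property names, and the `toLabelDetectionConfig` function are hypothetical names chosen for illustration. The extension itself reads these values from its installation parameters, not from an object like this.

```typescript
// Hypothetical types for illustration only; not part of the extension.
type LabelDetectionMode = "SHOT_MODE" | "FRAME_MODE" | "SHOT_AND_FRAME_MODE";
type LabelModel = "builtin/stable" | "builtin/latest";

interface ExtensionSettings {
  inputBucket: string;
  outputBucket: string;
  inputPath: string;
  outputPath: string;
  labelDetectionMode: LabelDetectionMode;
  videoConfidenceThreshold: number;
  frameConfidenceThreshold: number;
  model: LabelModel;
  stationaryCamera: boolean;
}

// Picks out the settings that control label detection. The returned field
// names follow the API's LabelDetectionConfig message.
function toLabelDetectionConfig(s: ExtensionSettings) {
  return {
    labelDetectionMode: s.labelDetectionMode,
    videoConfidenceThreshold: s.videoConfidenceThreshold,
    frameConfidenceThreshold: s.frameConfidenceThreshold,
    model: s.model,
    stationaryCamera: s.stationaryCamera,
  };
}

// Example values taken from the parameter list above.
const settings: ExtensionSettings = {
  inputBucket: "devrel-extensions-testing.appspot.com",
  outputBucket: "devrel-extensions-testing.appspot.com",
  inputPath: "/video_annotation_input/",
  outputPath: "/video_annotation_output/",
  labelDetectionMode: "SHOT_MODE",
  videoConfidenceThreshold: 0.3,
  frameConfidenceThreshold: 0.4,
  model: "builtin/stable",
  stationaryCamera: true,
};

console.log(toLabelDetectionConfig(settings));
```

Typing the mode and model as string unions means a misspelled option is caught at compile time rather than after installation.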

Workflow of the Extension

After you set up and configure the “Label Videos with Cloud Video AI” Firebase extension, it runs automatically whenever a video file is uploaded to your designated Cloud Storage path.

Here’s the streamlined sequence of events:

- File Upload:

- The process is initiated when you upload your video file to the Firebase Cloud Storage bucket. It’s important to ensure that your file is in one of the supported video formats (like .MOV, .MPEG4, .MP4, .AVI, or formats decodable by ffmpeg) to enable proper processing. This step is crucial as it triggers the labeling workflow.

- Extension Activation:

- The extension listens for new files in the specified path of your Cloud Storage bucket. As soon as a new video file arrives, the extension sends it to the Google Cloud Video Intelligence API for labeling.

- Label Extraction Processing:

- The Google Cloud Video Intelligence API analyzes the video content at this stage. Utilizing advanced machine learning algorithms, it examines each frame or shot of the video (depending on your configuration) to identify and label various elements like objects, activities, and scenes.

- Creating and Storing the Label Output:

- Once the labeling process is complete, the extension compiles the extracted labels into a JSON file. This file provides a detailed and structured representation of the video content. The extension then writes this JSON file back to Cloud Storage, specifically in the output path you’ve set during configuration. In addition, the output filename is designed to match that of the input video file, with alterations only in the file extension to indicate its JSON format. This approach facilitates easy correlation between the input videos and their corresponding labeled outputs.
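The path handling in this workflow can be sketched as two small helpers. This is only an illustration of the naming rule described above (same base name, extension swapped for .json); `isInInputPath` and `expectedOutputName` are hypothetical names and are not taken from the extension's source code. The exact naming may differ between extension versions, so check the output path in your bucket before relying on it.

```typescript
// Hypothetical helpers that restate the workflow's path rules.

// Removes leading and trailing slashes, e.g. "/a/b/" -> "a/b".
function trimSlashes(path: string): string {
  return path.replace(/^\/+|\/+$/g, "");
}

// True when an uploaded object sits under the configured input path.
function isInInputPath(objectName: string, inputPath: string): boolean {
  const dir = trimSlashes(inputPath);
  if (dir === "") return true; // no input path configured: whole bucket
  return objectName.replace(/^\/+/, "").startsWith(dir + "/");
}

// Builds the expected output object name: the input's base name with its
// extension replaced by .json, placed under the output path.
function expectedOutputName(objectName: string, outputPath: string): string {
  const fileName = objectName.split("/").pop() ?? objectName;
  const dot = fileName.lastIndexOf(".");
  const baseName = dot > 0 ? fileName.slice(0, dot) : fileName;
  const dir = trimSlashes(outputPath);
  return dir === "" ? `${baseName}.json` : `${dir}/${baseName}.json`;
}

console.log(isInInputPath("video_annotation_input/cat.mp4", "/video_annotation_input/"));
console.log(expectedOutputName("video_annotation_input/cat.mp4", "/video_annotation_output/"));
```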

Steps to Label Videos Using Firebase “Label Videos with Cloud Video AI” Extension

Before you begin, ensure the Firebase SDK is initialized and you can access Firebase Cloud Storage. Your application should have a UI component that allows users to select video files. Here’s a basic setup:

    import { initializeApp } from 'firebase/app';
    import { getStorage, getDownloadURL, ref, uploadBytes } from 'firebase/storage';

    const firebaseConfig = {
      // Your Firebase configuration keys
    };

    const app = initializeApp(firebaseConfig);

    // Despite its name, storageRef holds the Storage service instance.
    const storageRef = getStorage(app);

You also need a UI that lets users select a video file, for example:

    <!-- HTML input element for file selection -->
    <input type="file" id="video-upload" accept="video/*" />

JavaScript to handle the file selection:

    document
      .getElementById('video-upload')
      .addEventListener('change', handleFileUpload);

    // Holds the most recently selected video file.
    let file;

    function handleFileUpload(event) {
      file = event.target.files[0];
    }

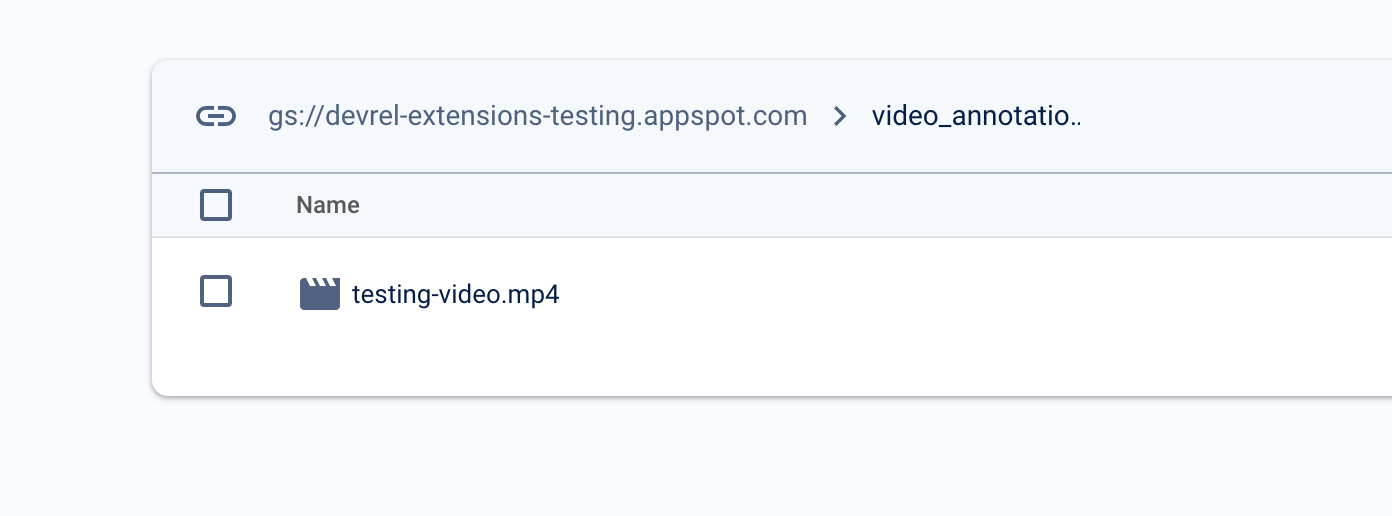
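Before uploading, it can help to reject files the extension is unlikely to process. The sketch below is one possible client-side guard, assuming a check on the MIME type and on the file extensions named in the workflow section. `SelectedFile`, `SUPPORTED_EXTENSIONS`, and `checkVideoFile` are hypothetical names, and the check is a convenience only: it does not guarantee that the Video Intelligence API can decode the file.

```typescript
// Hypothetical pre-upload check; a browser File object has these fields.
interface SelectedFile {
  name: string;
  type: string; // MIME type reported by the browser, may be empty
  size: number; // size in bytes
}

// Extensions listed as supported in the workflow section.
const SUPPORTED_EXTENSIONS = [".mov", ".mpeg4", ".mp4", ".avi"];

// Returns null when the file looks acceptable, or a message explaining
// why it was rejected.
function checkVideoFile(file: SelectedFile): string | null {
  if (file.size === 0) {
    return "The selected file is empty.";
  }
  const lowerName = file.name.toLowerCase();
  const hasKnownExtension = SUPPORTED_EXTENSIONS.some((ext) =>
    lowerName.endsWith(ext)
  );
  if (!file.type.startsWith("video/") && !hasKnownExtension) {
    return `"${file.name}" does not look like a video file.`;
  }
  return null;
}

console.log(checkVideoFile({ name: "clip.mp4", type: "video/mp4", size: 1024 }));
console.log(checkVideoFile({ name: "notes.txt", type: "text/plain", size: 12 }));
```

In the change handler, the result could decide whether to keep the selected file or show the message to the user.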

Step 1: Upload the Video File to Firebase Cloud Storage

Upload a video file to a specific path in Firebase Cloud Storage that the “Label Videos with Cloud Video AI” extension is configured to listen to.

    const videoRef = ref(storageRef, `/video_annotation_input/${file.name}`);

    uploadBytes(videoRef, file)
      .then((snapshot) => {
        console.log('Uploaded a video file!');
      })
      .catch((error) => {
        console.error('Upload failed:', error);
      });

You can see the result below.

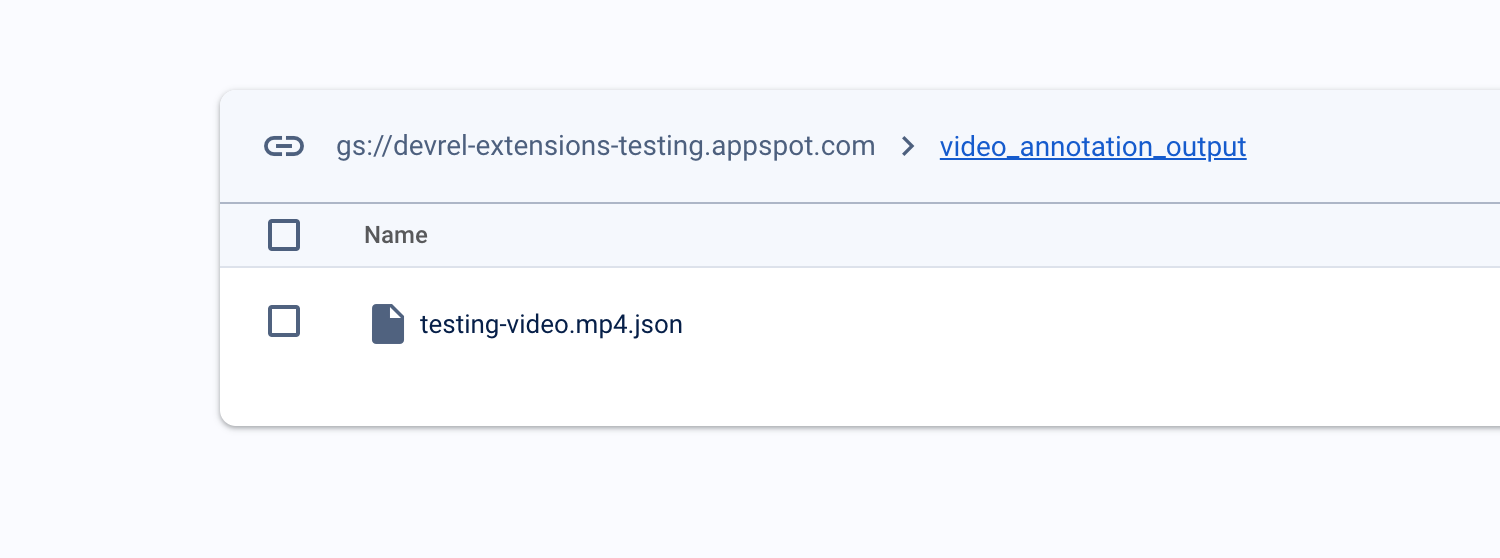

Step 2: Monitor the Labeling Process and Verify Output

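The extension automatically processes the video file and writes the labels as a JSON file to the Cloud Storage output path you defined in the configuration (/video_annotation_output/ in this example). Labeling runs asynchronously, so the output file may appear some time after the upload completes.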
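One way to monitor for the output from the client is to poll until the JSON file exists. The following is a minimal sketch under stated assumptions: `waitForOutput` is a hypothetical helper, the attempt count and delay are arbitrary defaults, and it assumes a missing object is reported with the Firebase Storage error code `storage/object-not-found`. The `getUrl` argument is any function that resolves to the download URL, for example a wrapper around `getDownloadURL`.

```typescript
// Hypothetical polling helper; not part of the Firebase SDK.
async function waitForOutput(
  getUrl: () => Promise<string>,
  maxAttempts = 10,
  delayMs = 5000
): Promise<string> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      // Resolves as soon as the output file can be found.
      return await getUrl();
    } catch (error) {
      const code = (error as { code?: string } | null)?.code;
      // Only a missing object means "not ready yet"; anything else
      // (for example a permission error) is rethrown immediately.
      const notReadyYet = code === "storage/object-not-found";
      if (!notReadyYet || attempt === maxAttempts) {
        throw error;
      }
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw new Error("maxAttempts must be at least 1");
}

// Usage sketch (assumes a storage reference named labelsFileRef):
// const url = await waitForOutput(() => getDownloadURL(labelsFileRef));
```

Polling is the simplest option for a demo. For production use, a server-side trigger on the output path would avoid repeated requests from the client.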

Step 3: Retrieve and Use the Labeled Data

Once the labeling is complete, you can fetch the JSON file with the labeled data from Cloud Storage. This data can then be used in your application as needed.

    const labelsFileRef = ref(
      storageRef,
      `/video_annotation_output/${file.name}.json`
    );

    getDownloadURL(labelsFileRef)
      .then((url) => {
        console.log(url);
        // Return the fetch promise so that its errors reach the catch below.
        return fetch(url);
      })
      .then((response) => response.json())
      .then((data) => {
        console.log('Labeled data:', data);
        const annotations = data.annotation_results[0];
        // Fall back to an empty list if no segment-level labels are present.
        const labels = annotations.segment_label_annotations ?? [];
        labels.forEach((label: any) => {
          console.log(`${label.entity.description} occurs!`);
        });
      })
      .catch((error) => {
        console.error('Failed to retrieve labels:', error);
      });

For more information on the JSON response format, see the AnnotateVideoResponse API documentation.
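Once the JSON is parsed, a small helper can turn it into something an app can display. The sketch below is a partial, assumed typing of the output: `annotation_results`, `segment_label_annotations`, and `entity.description` appear in the snippet above, while `segments[].confidence` is taken from the API's label annotation format and should be verified against your own output. `topLabels` and the 0.5 default cutoff are hypothetical choices.

```typescript
// Partial typing of the output JSON: only the fields used here.
interface LabelAnnotation {
  entity: { description: string };
  segments?: { confidence: number }[];
}

interface AnnotationOutput {
  annotation_results: { segment_label_annotations?: LabelAnnotation[] }[];
}

interface ScoredLabel {
  description: string;
  confidence: number;
}

// Returns labels whose best segment confidence is at least minConfidence,
// sorted from most to least confident.
function topLabels(output: AnnotationOutput, minConfidence = 0.5): ScoredLabel[] {
  const scored: ScoredLabel[] = [];
  for (const result of output.annotation_results) {
    for (const label of result.segment_label_annotations ?? []) {
      // Use the highest confidence across the label's segments (0 if none).
      const confidence = (label.segments ?? []).reduce(
        (best, segment) => Math.max(best, segment.confidence),
        0
      );
      scored.push({ description: label.entity.description, confidence });
    }
  }
  return scored
    .filter((label) => label.confidence >= minConfidence)
    .sort((a, b) => b.confidence - a.confidence);
}

// Made-up sample data in the assumed shape.
const sample: AnnotationOutput = {
  annotation_results: [
    {
      segment_label_annotations: [
        { entity: { description: "dog" }, segments: [{ confidence: 0.92 }] },
        { entity: { description: "grass" }, segments: [{ confidence: 0.41 }] },
        { entity: { description: "park" }, segments: [{ confidence: 0.77 }] },
      ],
    },
  ],
};

console.log(topLabels(sample).map((label) => label.description));
```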

CORS

If you fetch the JSON file in the browser to parse and display the data, you must configure CORS on your bucket.

Configuring CORS for Firebase Cloud Storage

Cross-Origin Resource Sharing (CORS) is a security feature that controls how resources on a web server can be requested from another domain. To configure CORS for your Firebase Cloud Storage, you can use the gsutil command line tool.

Install gsutil: First, ensure you have gsutil installed. Installation instructions are available in the Google Cloud documentation.

Set Up CORS Configuration File:

- Create a file named cors.json.

- Define your CORS policy. For example, to allow GET requests from your domain, your cors.json might look like this (replace “https://example.com” with your domain):

    [
      {
        "origin": ["https://example.com"],
        "method": ["GET"],
        "maxAgeSeconds": 3600
      }
    ]

Apply the Configuration:

- Run the following gsutil command, replacing “exampleproject.appspot.com” with your bucket name:

    gsutil cors set cors.json gs://exampleproject.appspot.com

- This command applies the CORS settings to your Firebase Cloud Storage bucket.

For more complex CORS configurations, refer to the official documentation.
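If the app is served from several origins (for example, production and a local development server), the contents of cors.json can be generated rather than edited by hand. This is a minimal sketch: `buildCorsConfig` is a hypothetical helper, and the localhost origin is only an example value.

```typescript
// Shape of one rule in a Cloud Storage CORS configuration file.
interface CorsRule {
  origin: string[];
  method: string[];
  maxAgeSeconds: number;
}

// Builds the text for cors.json, allowing GET requests from each origin.
function buildCorsConfig(origins: string[], maxAgeSeconds = 3600): string {
  const rule: CorsRule = { origin: origins, method: ["GET"], maxAgeSeconds };
  return JSON.stringify([rule], null, 2);
}

// Example origins; replace them with your own.
console.log(buildCorsConfig(["https://example.com", "http://localhost:5173"]));
```

The printed text can be saved as cors.json and applied with the gsutil command shown earlier.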

Conclusion

The “Label Videos with Cloud Video AI” Firebase extension simplifies extracting valuable data from video content. Automating video labeling opens up many opportunities for enhancing video content management, searchability, and analysis. With this extension, you can effortlessly turn your video content into a rich source of information and insights suitable for various applications.